Data modeling is the process of creating a conceptual representation of data objects and of the associations between them. Data modeling tools help ensure consistency in naming conventions, security, default values, and semantics, all of which protect the quality of the data.

A data model provides a visual representation of the stored data. This helps enforce business rules and supports compliance with regulations and with government policies on data.

Here are a few Data Modelling Tools

Look into the list to learn more about the tools.

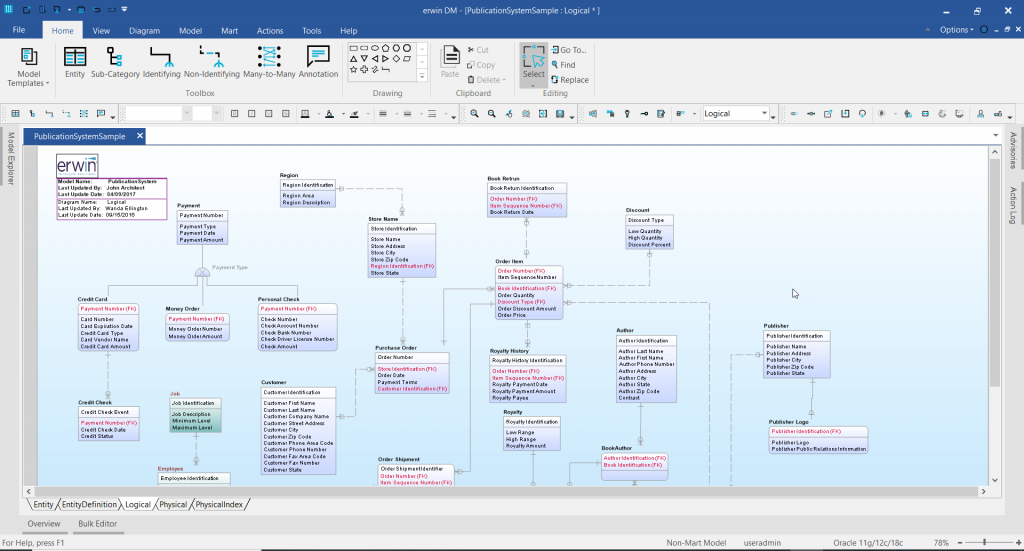

1. Erwin Data Modeler

Erwin Data Modeler has been in the data modeling industry for 30 years. It is one of the most trusted and widely used tools, and top financial, IT, and healthcare companies rely on it. Some of its known features are as follows:

Erwin DM is flexible, and its user interface is customizable. This makes data modeling jobs straightforward and simple. Tedious jobs such as setting standards, testing, deployment, and database design are automated with built-in functionality. Additional features such as model visualization are also available, and data models can be generated automatically, which eases the modeling effort.

Comparison tools are built in and allow bidirectional synchronization with legacy models as well as with existing models and databases. Erwin DM also allows custom script generation, which makes selective updates to data models possible.

Erwin DM also supports forward and reverse engineering. It integrates with NoSQL platforms and with other modeling platforms, which helps keep data management efficient, effective, and consistent.
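To make the idea of reverse engineering concrete, here is a minimal sketch that reads table and column definitions back out of a live database. It assumes SQLite (through Python's standard `sqlite3` module) as a stand-in for whatever database a modeling tool would connect to. The `reverse_engineer` function and the sample tables are hypothetical and are not how Erwin DM itself works.

```python
import sqlite3

def reverse_engineer(conn):
    """Read table and column definitions back out of a live SQLite database."""
    model = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        columns = {}
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        for _cid, name, col_type, notnull, _default, pk in conn.execute(
            f"PRAGMA table_info({table})"
        ):
            columns[name] = {
                "type": col_type,
                "not_null": bool(notnull),
                "primary_key": pk > 0,
            }
        model[table] = columns
    return model

# Hypothetical existing database to be reverse engineered.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE invoice (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(id),
        total REAL
    );
""")

model = reverse_engineer(conn)
for table, columns in model.items():
    print(table, columns)
```

The resulting dictionary is a very small "model" of the database. A real tool would additionally capture relationships, indexes, and constraints and draw them as a diagram.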

Next comes the generation and maintenance of metadata, which supports data intelligence and governance. The metadata generated during data modeling can be pushed directly to Erwin's hosted data catalog and business glossary.

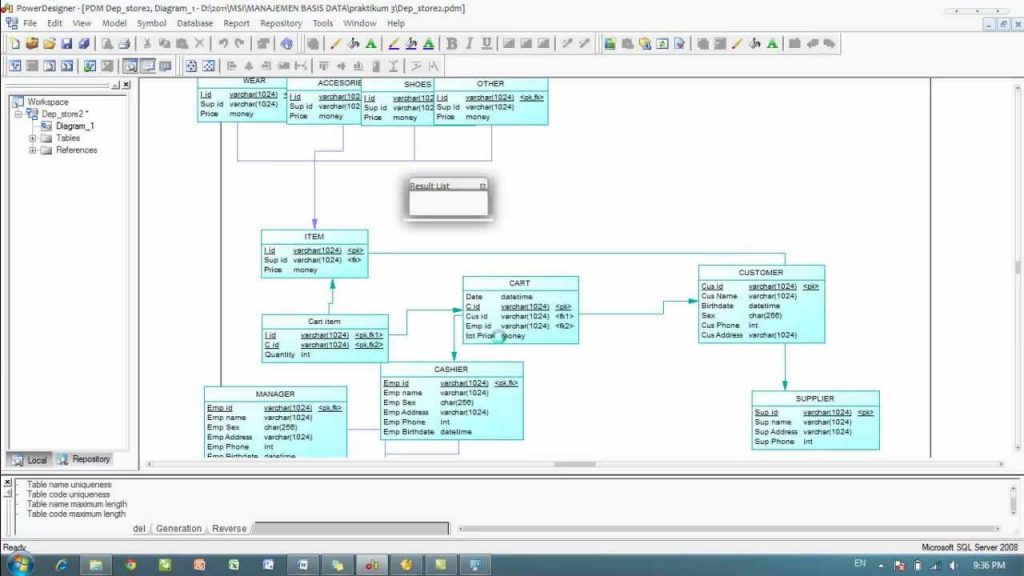

2. SAP PowerDesigner

SAP PowerDesigner is a well-known, award-winning data modeling tool. It can capture, analyze, and present business data, and it follows industry-standard best practices. It provides comprehensive coverage of metadata storage and uses link-and-sync mechanisms, which gives a better understanding of input data.

Its metadata management can be used for capturing, analyzing, and generating data insights. All of this is stored in a single repository that can be accessed from anywhere. The following scenarios may call for SAP PowerDesigner.

First, you may need to share your understanding of the data, and of the data landscape, with the entire organization. Second, you may require a standard interface between varied business processes and artifacts.

Third, you may need a mechanism for tracking processes and data sharing between multiple applications and services across the business landscape. Finally, you may need to find critical paths, divergent processes, or bottlenecks in the business. Some features that make SAP PowerDesigner stand out are as follows.

The interactive user interface is simple and easy to use, which helps in understanding dependencies without getting into technical details. Objects can be dragged and dropped, and this works across platforms as well as with other tools and services. The tool enables comprehensive report creation and can create data dictionaries that define data and its mappings.

Access to metadata is highly secure and is controlled automatically. Web reporting is also supported. Link-and-sync technology provides seamless integration with various services and applications across the entire business landscape. Metadata is stored in a single repository with controlled access, which improves the ability to respond to organizational changes.

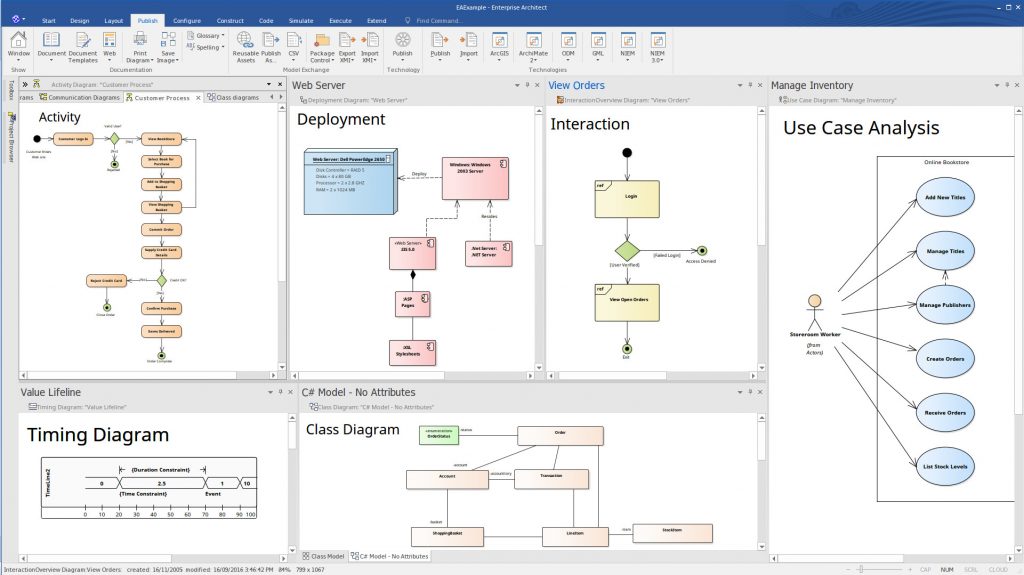

3. Enterprise Architect

Enterprise Architect is an enterprise-wide data modeling tool with a large set of strategies and functionalities. It allows us to visualize, analyze, test, and maintain data across the enterprise landscape. It uses perspective-based modeling, which simplifies the data models.

Four variants are available, depending on the need and the landscape in use. Professional suits entry-level data modeling. Corporate suits team-based modeling. Unified is suitable for advanced data modeling and also supports simulations. Ultimate is the full-fledged version of the Enterprise Architect data modeling tool.

The first remarkable feature is manageability. The tool helps organizations model and manage complex information in any business landscape, and it does so through diagram-based modeling.

The model does not need to be at an organizational level, since domain-specific models are also supported. Version management tracks changes to models and databases, and access to data models is controlled. Coming to the flexibility of Enterprise Architect, it pulls in data from various segments of the enterprise.

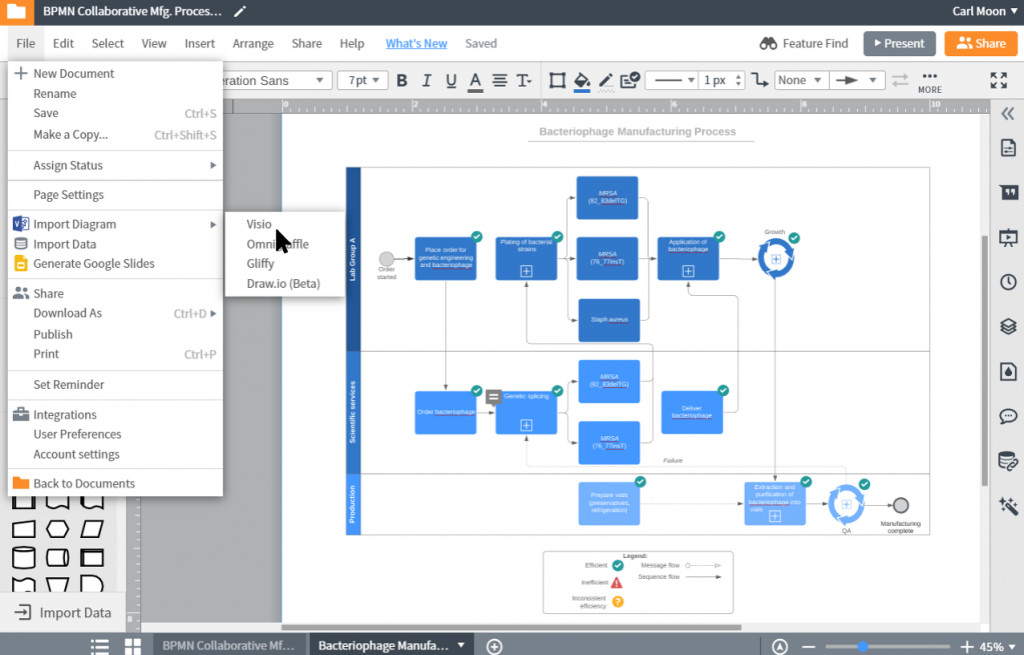

It can also develop a single, unified version of the model. Enterprise Architect uses open standards such as BPMN, UML, and SysML. It recognizes and prioritizes critical tasks, which improves model development over time. Its testing and debugging capabilities help ensure that the data model functions correctly. The tool can also simulate data models, which helps confirm both the process design and the business design.

Another strong feature is modeling itself. The tool can capture business requirements and can design and deploy data models. It also supports impact analysis of changes to the structure of the database and to the model itself.

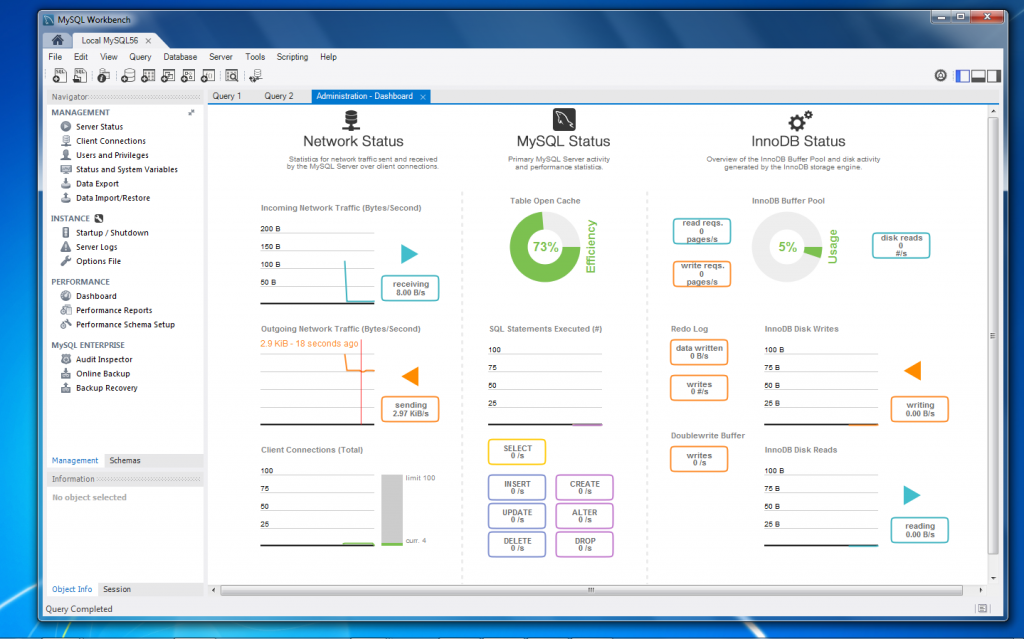

4. MySQL Workbench

MySQL Workbench is a unified data modeling tool for database administrators, architects, and developers. It runs on Windows, macOS, and Linux, and it supports administration, configuration, deployment, and backup. Here are some of its key features.

First, it supports visual database design. It provides the tools needed to design the schemas of data-driven databases. Architects can prepare designs and communicate them to stakeholders to get immediate feedback.

Data models can be validated against industry norms and standards, which helps ensure that no errors occur during model generation. ER diagrams and block diagrams can also be created. Forward and reverse engineering are supported as well, so a physical database can be created from a data model with a few clicks.

MySQL Workbench automatically generates SQL code, which makes the process simpler and more efficient and reduces human error. Architects can also take existing databases and reverse engineer them into a model. This reduces runtime errors and debugging effort, and it gives a better understanding of the database design.
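Automatic SQL generation (forward engineering) can be illustrated with a small sketch. The `MODEL` dictionary and the `generate_ddl` function below are hypothetical and only show the principle of turning a model into `CREATE TABLE` statements. They say nothing about how MySQL Workbench does it internally, and SQLite is assumed here purely so that the example runs without a database server.

```python
import sqlite3

# Hypothetical model: table name -> list of (column, SQL type, constraints).
MODEL = {
    "author": [
        ("id", "INTEGER", "PRIMARY KEY"),
        ("name", "TEXT", "NOT NULL"),
    ],
    "book": [
        ("id", "INTEGER", "PRIMARY KEY"),
        ("title", "TEXT", "NOT NULL"),
        ("author_id", "INTEGER", "NOT NULL REFERENCES author(id)"),
    ],
}

def generate_ddl(model):
    """Turn the model into one CREATE TABLE statement per table."""
    statements = []
    for table, columns in model.items():
        column_sql = ",\n".join(
            f"    {name} {sql_type} {constraints}".rstrip()
            for name, sql_type, constraints in columns
        )
        statements.append(f"CREATE TABLE {table} (\n{column_sql}\n);")
    return "\n\n".join(statements)

ddl = generate_ddl(MODEL)
print(ddl)

# Running the generated script creates the physical tables.
conn = sqlite3.connect(":memory:")
conn.executescript(ddl)
created = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
)]
```

Because the SQL is derived from the model rather than typed by hand, a change to the model is the only place where a mistake can be introduced.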

Coming to change management, this process is complicated, lengthy, and risky. A change in the data model may at times require changing the entire database. Database designers can use the tool to compare the existing database with the new one, so that the changes are explicit. This saves time and effort.
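The comparison of an existing database with a new design can be sketched as a simple diff between two models. The `diff_models` function and the two sample models are hypothetical, and a real tool would also compare keys, indexes, and constraints.

```python
def diff_models(old, new):
    """Compare two models given as {table: {column: type}} dictionaries."""
    changes = []
    for table in sorted(set(old) | set(new)):
        if table not in old:
            changes.append(f"add table {table}")
            continue
        if table not in new:
            changes.append(f"drop table {table}")
            continue
        old_cols, new_cols = old[table], new[table]
        for column in sorted(set(old_cols) | set(new_cols)):
            if column not in old_cols:
                changes.append(f"add column {table}.{column} {new_cols[column]}")
            elif column not in new_cols:
                changes.append(f"drop column {table}.{column}")
            elif old_cols[column] != new_cols[column]:
                changes.append(
                    f"change {table}.{column}: {old_cols[column]} -> {new_cols[column]}"
                )
    return changes

# Hypothetical existing database and proposed new model.
existing = {
    "customer": {"id": "INTEGER", "name": "TEXT"},
    "legacy_log": {"id": "INTEGER"},
}
proposed = {
    "customer": {"id": "INTEGER", "name": "TEXT", "email": "TEXT"},
    "invoice": {"id": "INTEGER", "total": "REAL"},
}

changes = diff_models(existing, proposed)
for change in changes:
    print(change)
```

Listing the differences explicitly lets a designer review each change before anything is applied to the live database.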

Next comes one of the most monotonous jobs: database documentation, which can consume much of your work time. MySQL Workbench provides DBDoc, which enables point-and-click documentation and makes the documentation clearer. The output can be in text or HTML format, whichever is convenient for you.

5. Oracle SQL Developer Data Modeler

This data modeling tool supports physical database design. It has many functions, for example capturing and exploring data, managing data, and gaining insights from it. Its features are as follows.

Like many data modeling tools, it provides the classic functionality. It saves and exports designs from the modeler, and the exported designs can be used in both Oracle and non-Oracle environments.

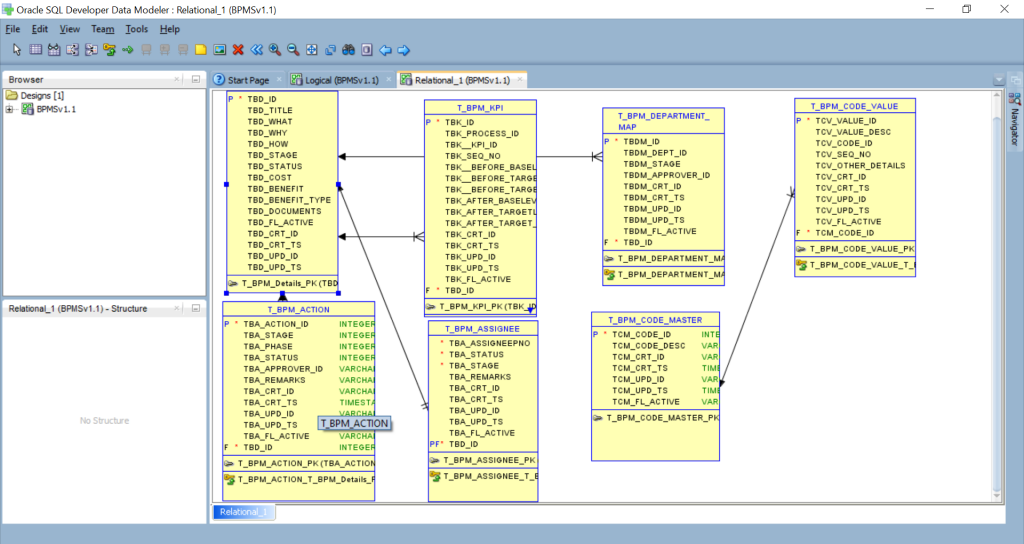

This tool follows an open approach to database design. A design contains a single logical model, an optional relational model, and a physical model, all of which can be built in the tool. The logical model is the ER model. Database designs can be saved as XML files, so a design extracted from one modeler can be imported into another.
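Saving a design as XML and loading it elsewhere can be sketched with Python's standard `xml.etree.ElementTree` module. The element names (`design`, `table`, `column`) are made up for this illustration and are not the file format that Oracle SQL Developer Data Modeler actually uses.

```python
import xml.etree.ElementTree as ET

def model_to_xml(model):
    """Serialize a {table: {column: type}} model into an XML string."""
    root = ET.Element("design")
    for table, columns in model.items():
        table_el = ET.SubElement(root, "table", name=table)
        for column, col_type in columns.items():
            ET.SubElement(table_el, "column", name=column, type=col_type)
    return ET.tostring(root, encoding="unicode")

def xml_to_model(xml_text):
    """Rebuild the model dictionary from the XML produced above."""
    root = ET.fromstring(xml_text)
    return {
        table_el.get("name"): {
            col_el.get("name"): col_el.get("type")
            for col_el in table_el.findall("column")
        }
        for table_el in root.findall("table")
    }

# Hypothetical design exported from one modeler ...
design = {
    "customer": {"id": "INTEGER", "name": "TEXT"},
    "invoice": {"id": "INTEGER", "customer_id": "INTEGER", "total": "REAL"},
}
xml_text = model_to_xml(design)

# ... and imported into another.
restored = xml_to_model(xml_text)
print(xml_text)
```

A text-based format like this is what makes a design portable: any tool that understands the element names can rebuild the same model.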

The modeler generates data definition (DDL) files for both new and existing databases, which reduces human error. It also creates data modeling reports that can be circulated among stakeholders, who can highlight the corrections to be made. Reports can be produced in HTML format, and unique names can be set for them in a report repository.

6. IBM InfoSphere Data Architect

This is a data modeling tool from IBM, based on the Eclipse integrated development environment. It allows the creation of both logical and physical database designs. It can discover data patterns, model data, and find relationships. It also standardizes the interfaces between applications, servers, and existing databases.

The tool supports cross-role collaboration, both across organizations and across departments, which makes it popular in large enterprises. It also provides visualizations of organizational data, making the process more efficient and less time-consuming. It allows the modeling of existing databases.

Data Architect helps determine what data already exists and plan for new data entering the organization. This simplifies the understanding of dimensional data. Data models can be shared with the data warehousing team, so that warehouses are built in sync with the models, and with business strategy teams.

7. Microsoft Visio

Microsoft Visio is well known as a diagramming tool. It has an intuitive graphical interface that makes visualizing and modeling data easy. It can import data from various applications, for instance Microsoft Excel, PowerPoint, and Word, and it creates flowcharts and diagrams from data models.

This tool creates data models and enables turning them into fully functional databases. It connects to several databases, such as SQL Server, MySQL, and Oracle. Various industry-standard templates are available in Visio, which makes it more convenient to use without compromising on memory consumption. The recent release makes Visio available on iPad, so it is available across platforms.

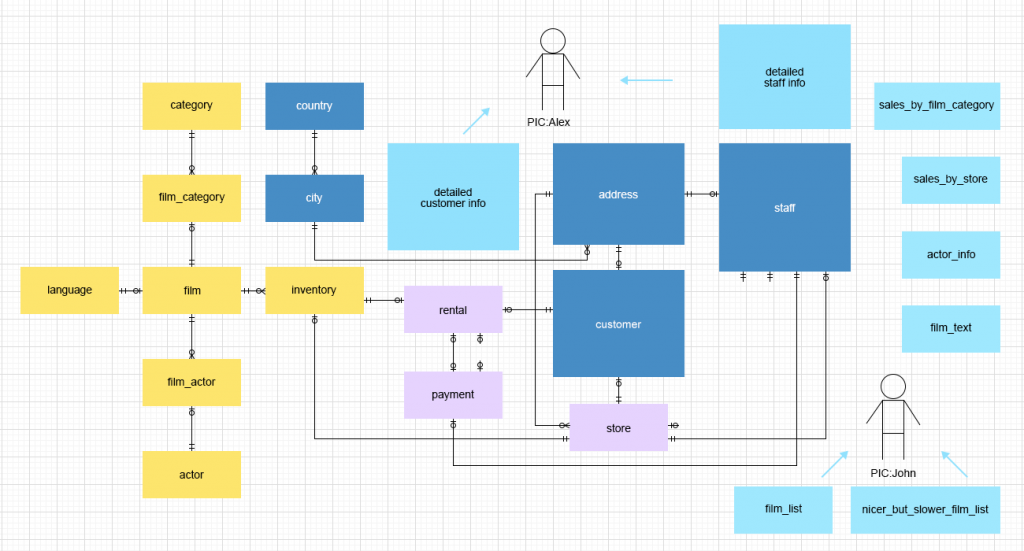

8. Navicat Data Modeler

Navicat Data Modeler is one of the well-known data modeling tools. It handles physical, logical, and conceptual data models. Various operations are available, for instance forward and reverse engineering and the import and export of designs. Data models can also be generated based on the industry landscape.

Navicat creates complex database objects without writing a single line of code or SQL. The model types are highly flexible. Existing databases can be viewed in diagram form, with the diagram created by reverse engineering, which makes models convenient and straightforward to work with. Code generation is automatic as well, and the generated SQL can be exported to help create a physical database.

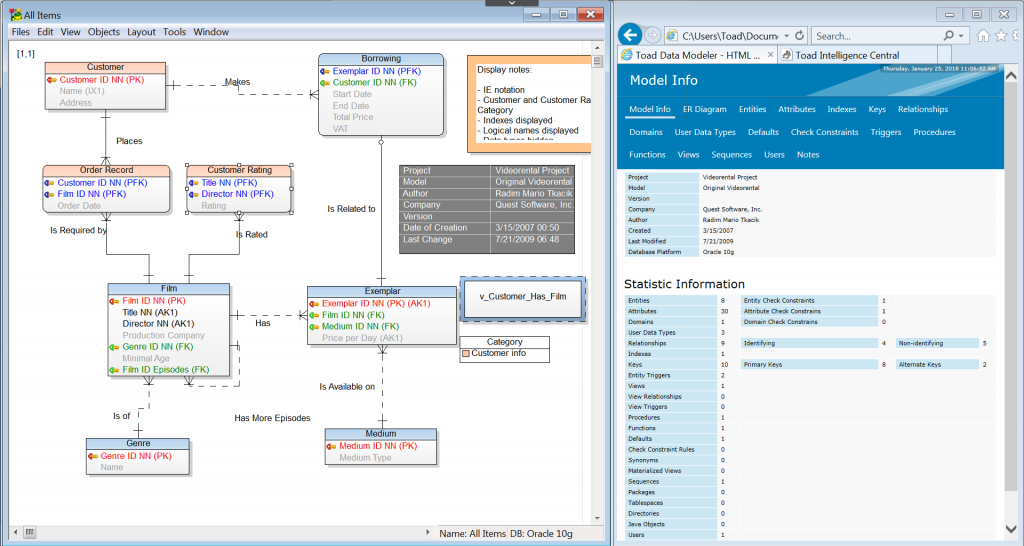

9. Toad Data Modeler

Toad Data Modeler is a database design tool. Design can be carried out visually and requires no expertise in SQL coding, which makes it an outstanding data modeling tool. It is primarily used to document and visualize existing databases through reverse engineering, and new databases can also be designed. It integrates seamlessly with a wide range of databases, for instance MySQL and SQL Server.

Toad Data Modeler generates reports in HTML and PDF formats. It also helps secure sensitive data. Customizations are available: an existing model can be transformed using macros and custom scripts. Lastly, data migration across business landscapes is possible.

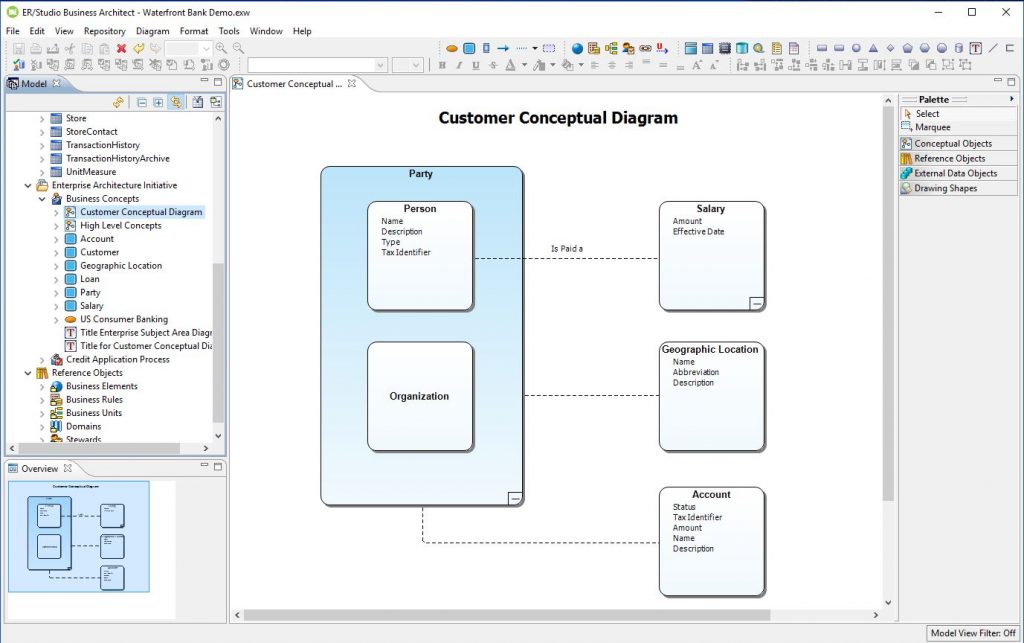

10. ER/Studio

ER/Studio is yet another data modeling tool, and it is well regarded throughout the industry. It can create an enterprise data model, and it maintains, generates, and represents data structures.

This covers various data attributes, for instance data scope, relationships, and visibility. Data can be documented across multiple landscapes, and data handling in ETL processes across the enterprise can be tracked. The tool can also provide an impact analysis for existing databases. Moreover, databases, database structures, and models are kept consistent across platforms.

ER/Studio allows database documentation as well. It comes with control features for building naming conventions and standards for data entities and models.

Business glossaries can be built with this tool. The data models are saved in repositories. Some metadata requires permission to access, which only its owner can grant. The tool has provisions for data governance and integrates with complex enterprise software, for instance SAP, CRM, and Salesforce.

Problems with database formation

The main problems arise when storing input in the database. You may collect data from multiple sources, which is fine, but you may face the following issues.

First

The reports may be incorrect. Most of the stored data is used for data analysis. Even with a stable database such as Oracle 11g, reports may turn out to be faulty, for example in the total revenue generated or the number of units sold. Often the cause is a failure to check for duplicate values.
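The effect of unchecked duplicates on a report can be shown with a tiny example. The `sale` table and its rows are invented for illustration, and SQLite is assumed only so that the example runs without a database server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sale (order_no TEXT, units INTEGER, revenue REAL)")
conn.executemany(
    "INSERT INTO sale VALUES (?, ?, ?)",
    [
        ("A-1", 2, 40.0),
        ("A-2", 1, 15.0),
        ("A-2", 1, 15.0),  # the same order loaded again from a second source
    ],
)

# Naive report: the duplicated order is counted twice.
naive_units, naive_revenue = conn.execute(
    "SELECT SUM(units), SUM(revenue) FROM sale"
).fetchone()

# Check for order numbers that occur more than once.
duplicates = conn.execute(
    "SELECT order_no, COUNT(*) FROM sale GROUP BY order_no HAVING COUNT(*) > 1"
).fetchall()

# Report over distinct rows only.
units, revenue = conn.execute(
    "SELECT SUM(units), SUM(revenue) "
    "FROM (SELECT DISTINCT order_no, units, revenue FROM sale)"
).fetchone()

print("naive:", naive_units, naive_revenue)
print("duplicates:", duplicates)
print("deduplicated:", units, revenue)
```

The naive totals overstate both units sold and revenue, which is exactly the kind of faulty report described above.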

Second

Searches may take longer than expected. The cause is often the design of the database rather than its size or how it is used. If the database is not designed properly, multiple tables may be interlinked, which leads to more duplicate data. Search performance then degrades as the data volume grows, and scalability is put at risk.

Third

The data flow may be unclear. Data flow is to a system what blood flow is to the human body. Without a data model, one has to troubleshoot network issues and write database queries before the picture of the database becomes clear to anyone.

Fourth

Users are limited in what they can do. A database that lacks a proper structure becomes hard to manage and tends to grow in size. Besides, changing a complex business hierarchy is challenging, because it means changing the underlying database. As a result, functionality and usage are limited.

The remedy for all these drawbacks

First

Data modeling increases the accuracy of understanding and gives an idea of the data objects in the system.

Second

It provides an optimized and efficient database design.

Third

It gives an idea of tables in the database. Details about primary and foreign keys are also provided. Any constraints or checks needed are also shown.
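As a rough illustration of what primary keys, foreign keys, and checks look like once a model becomes a database, here is a minimal sketch. The `department` and `employee` tables and the `try_insert` helper are hypothetical, and SQLite is assumed because it ships with Python.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE department (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE employee (
        id INTEGER PRIMARY KEY,
        department_id INTEGER NOT NULL REFERENCES department(id),
        salary REAL CHECK (salary >= 0)
    );
    INSERT INTO department (id, name) VALUES (1, 'Finance');
    INSERT INTO employee (id, department_id, salary) VALUES (1, 1, 5000);
""")

def try_insert(sql):
    """Return True if the row is accepted, False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql)
        return True
    except sqlite3.IntegrityError:
        return False

# Foreign key: department 99 does not exist.
unknown_department = try_insert("INSERT INTO employee VALUES (2, 99, 4000)")
# Check constraint: salary must not be negative.
negative_salary = try_insert("INSERT INTO employee VALUES (3, 1, -10)")
# A row that satisfies every rule in the model.
valid_row = try_insert("INSERT INTO employee VALUES (4, 1, 4200)")

print(unknown_department, negative_salary, valid_row)
```

The rules live in the schema itself, so every application that writes to the database is held to the same model.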

Fourth

The data model is a blueprint of the database. This allows the formation of an actual database.

Fifth

A good data model contains no duplicate values and makes critical data available. It also helps avoid missing data, so that the tables contain no blank values.
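One way a model can rule out duplicates and blanks is through `UNIQUE` and `NOT NULL` constraints. The sketch below uses an invented `product` table and assumes SQLite. Other databases express the same rules with very similar syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE product (
        sku TEXT NOT NULL UNIQUE,
        name TEXT NOT NULL
    )
""")

rows = [
    ("P-100", "Keyboard"),
    ("P-100", "Keyboard"),  # duplicate SKU
    ("P-200", None),        # blank name
    ("P-300", "Monitor"),
]

rejected = []
for sku, name in rows:
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO product VALUES (?, ?)", (sku, name))
    except sqlite3.IntegrityError as exc:
        rejected.append((sku, str(exc)))

stored = [row[0] for row in conn.execute("SELECT sku FROM product ORDER BY sku")]
print("stored:", stored)
print("rejected:", rejected)
```

Only the clean rows reach the table, and the rejected ones are reported instead of silently distorting later results.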

Sixth

Data modeling also has its costs. It is time-consuming, and it has to stay in sync with business use, so domain experience is necessary.

Seventh

Modelers may overlook how the data is physically stored, since their main concern is with the data objects.

Eighth

Even small changes may require changes across the entire database. This may compromise system availability, and the application may experience downtime.

Data modeling is crucial to building a database, and it plays a major part in determining the future of that database.

Concluding

This article covers the importance of data modeling tools and lists some of the top picks. All of these tools make storing data easier. The databases can now relax. And so can you. I hope this article clears up most doubts about data models.
